Current Research Projects in the CCL

XGFabric: Coupling Sensor Networks and HPC Facilities with Advanced Wireless Networks for Near Real-Time Simulation of Digital Agriculture (DOE ASCR)

PIs: Shantenu Jha and Ozgur Kilic (Brookhaven National Lab), Rich Wolski (University of California - Santa Barbara), Douglas Thain (University of Notre Dame), and Mehmet Can Vuran (University of Nebraska-Lincoln)

XGFabric is one of several projects recently awarded by the U.S. Department of Energy for exploratory research in extreme scale science.

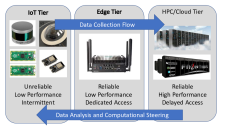

This project will explore the design of systems for connecting remote wireless sensor networks with high performance computing systems. The driving applications are in digital agriculture, where extensive sensors are already used to observe weather, growing conditions, and the current state of crops. However, exploiting this data to improve agriculture in a timely way is challenging, due to energy and computing limitations of remote settings. Only a high performance computing (HPC) center has the capability to run the simulations needed to predict micro-weather and recommend interventions. This project will develop XGFabric, a novel software system that will allow complex workflows to span remote 5G/6G sensor networks, edge computers, and powerful HPC facilities, enabling timely access to powerful computing capabilities from remote agricultural sites.

SADE: A Safety-Aware Ecosystem of Reputable sUAS

PI: Jane Cleland-Huang (U Notre Dame)

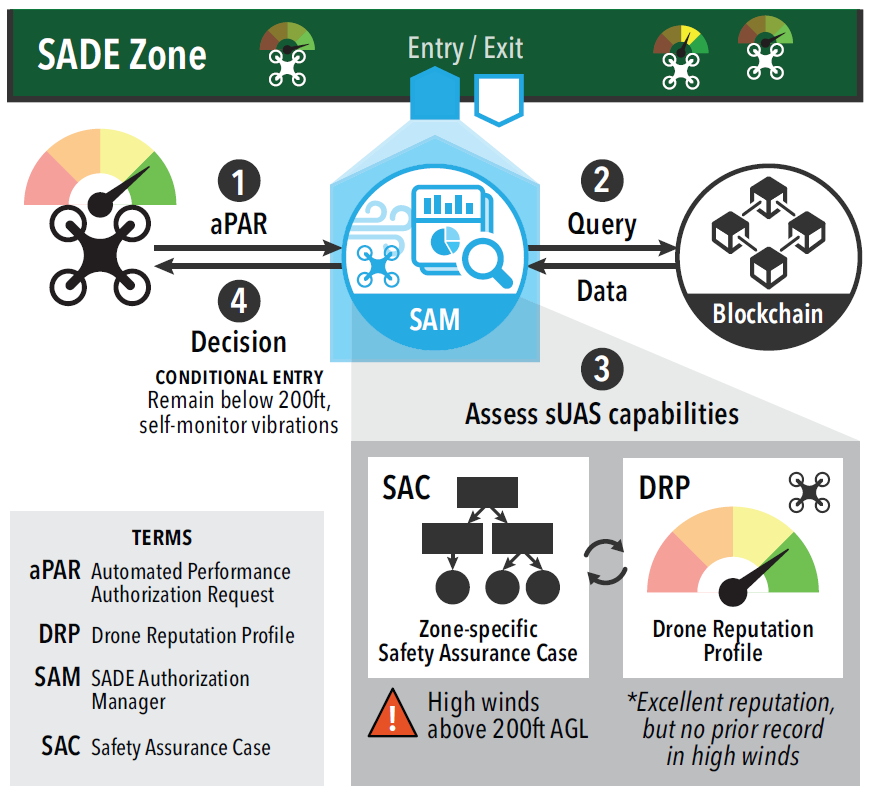

The SADE project is a large collaborative undertaking across the University of Notre Dame, Iowa State University, St Louis University, University of Texas El Paso, and DePaul University. The overall goal of the project is to develop technology for safety zones that permit only trusted drones to operate in congested or sensitive airspace. The CCL is contributing to this project by developing complex simulation infrastructure that will allow for in-silico testing of drone control software. This infrastructure includes flight controllers (PX4), physical simulators (Gazebo), gaming technology (Unreal), and other services, all dynamically deployed in HPC infrastructure. This allows developers of drone control software to evaluate the correctness of systems at scale before deploying to real-world scenarios.

Currently hiring a postdoctoral scholar to work on this project!

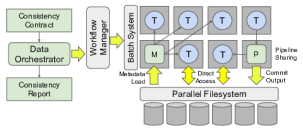

Pledge: Accelerating Data Intensive Scientific Workflows with Consistency Contracts

PI: Douglas Thain (U Notre Dame)

Advanced discovery in scientific computing increasingly depends upon the successful execution of complex workflows that make use of a shared filesystem. We observe that workflows rarely need the full power of global sequential consistency. Instead, they tend to follow several common I/O patterns that can be characterized at a high level. Rather than depend upon the filesystem to "figure things out" at the last minute, we propose consistency contracts as a means of expressing the I/O intentions of complex workflows. With a contract in hand, the runtime system can then perform a variety of optimizations that exploit the internal storage and I/O capacity of the cluster as a whole.
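
To make the idea concrete, the following is a minimal sketch of what declaring and checking I/O intentions could look like. It is purely illustrative and does not reflect the actual Pledge contract format or implementation: the pattern names, the `Contract` class, and its `check` method are all hypothetical, chosen only to show how a high-level declaration lets a runtime decide whether an access is expected.

```python
from dataclasses import dataclass, field
from enum import Enum


class Pattern(Enum):
    """Hypothetical I/O patterns a workflow could declare for a file."""
    READ_ONLY = "read-only"                        # input data, never written
    WRITE_ONCE_READ_MANY = "write-once-read-many"  # one write, then reads only
    PRIVATE_SCRATCH = "private-scratch"            # touched by a single task


@dataclass
class Contract:
    """Maps each file path to the pattern the workflow promises to follow."""
    promises: dict
    written: set = field(default_factory=set)   # write-once files already written
    owner: dict = field(default_factory=dict)   # scratch file -> first task to use it

    def check(self, task, op, path):
        """Return True if this access (op is 'read' or 'write') honors the contract."""
        pattern = self.promises.get(path)
        if pattern is None:
            return False                        # undeclared file: not covered
        if pattern is Pattern.READ_ONLY:
            return op == "read"
        if pattern is Pattern.PRIVATE_SCRATCH:
            # The first task to touch the file becomes its only permitted user.
            return self.owner.setdefault(path, task) == task
        # WRITE_ONCE_READ_MANY: a single write, and reads only after that write.
        if op == "write":
            if path in self.written:
                return False
            self.written.add(path)
            return True
        return path in self.written


if __name__ == "__main__":
    contract = Contract({
        "input.dat": Pattern.READ_ONLY,
        "result.dat": Pattern.WRITE_ONCE_READ_MANY,
    })
    for access in [("t1", "read", "input.dat"),
                   ("t1", "write", "result.dat"),
                   ("t2", "write", "result.dat")]:
        print(access, contract.check(*access))
```

In this sketch, a file declared write-once-read-many never changes after its single write, so a runtime that trusts the declaration could replicate it freely across worker-local storage without consulting the shared filesystem again. That is the kind of optimization a contract is meant to enable.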

NBFlow: From Notebook to Workflow and Back Again (CSSI Framework)

PIs: Douglas Thain, Kevin Lannon, Tanu Malik (DePaul U), and Shaowen Wang (U Illinois)

NBFlow is our newest CSSI project. It brings together interactive notebook technologies (Jupyter), reproducibility tools (SciUnit), and distributed workflows (TaskVine). The combined result will be a new system (NBFlow) that makes it easy to execute large scale workflows on thousands of cluster nodes through the familiar interface of the executable notebook. While easily stated, this presents a number of challenges in reconciling the high throughput but unpredictable nature of clusters with the immediate feedback expected by notebook users. We will develop a number of new techniques to convert notebooks into workflows, execute them reliably on the cluster, and then return them to the user. This project is a collaboration with Kevin Lannon (U Notre Dame), Tanu Malik (DePaul U), and Shaowen Wang (U Illinois).

TaskVine: A User Level Framework for Data Intensive Scientific Applications (CSSI Element)

PI: Douglas Thain

TaskVine is open source software for building large scale data intensive dynamic workflows that run on HPC clusters, GPU clusters, and commercial clouds. As tasks access external data sources and produce their own outputs, more and more data is pulled into local storage on workers. This data is used to accelerate future tasks and avoid re-computing existing results. Data gradually grows "like a vine" through the cluster. By using a variety of pioneering techniques such as distant futures, distributed provenance, and graph pruning, TaskVine is able to outperform traditional distributed computing techniques. It has been used to build large scale applications in scientific fields such as high energy physics, bioinformatics, molecular dynamics, and machine learning. We continue to develop new techniques within TaskVine, and extend its use to new applications.
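
The effect of retaining data on workers can be illustrated with a small toy model. This is a sketch under stated assumptions, not TaskVine's API or its scheduler: the `ToyCluster` class, its methods, and the worker and file names are all hypothetical. It assumes that each worker keeps every file it has fetched or produced, and that a task is placed on the worker already holding the most of its inputs.

```python
class ToyCluster:
    """Toy model (not TaskVine): workers retain every file they touch."""

    def __init__(self, workers):
        # Each worker starts with an empty local cache of file names.
        self.cache = {w: set() for w in workers}
        self.transfers = 0  # files that had to be fetched onto a worker

    def pick_worker(self, inputs):
        # Prefer the worker that already holds the most inputs.
        # Ties go to the worker listed first.
        wanted = set(inputs)
        return max(self.cache, key=lambda w: len(self.cache[w] & wanted))

    def run(self, inputs, output):
        """Place one task, fetch any missing inputs, and retain its output."""
        worker = self.pick_worker(inputs)
        missing = set(inputs) - self.cache[worker]
        self.transfers += len(missing)   # only missing files are moved
        self.cache[worker] |= missing    # fetched inputs stay in the cache
        self.cache[worker].add(output)   # the output stays where it was made
        return worker


if __name__ == "__main__":
    cluster = ToyCluster(["w1", "w2"])
    print(cluster.run(["raw.dat"], "stage1.out"))     # input must be fetched
    print(cluster.run(["stage1.out"], "stage2.out"))  # input is already cached
    print("files transferred:", cluster.transfers)
```

In this model the second task lands on the worker that produced `stage1.out`, so no data moves. A real system must also balance load and manage limited storage, which this sketch deliberately ignores.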

Selected publications in this area: (see all papers)

- Accelerating Function-Centric Applications by Discovering, Distributing, and Retaining Reusable Context in Workflow Systems

- Adaptive Task-Oriented Resource Allocation for Large Dynamic Workflows on Opportunistic Resources

- Maximizing Data Utility for HPC Python Workflow Execution

- TaskVine: Managing In-Cluster Storage for High-Throughput Data Intensive Workflows

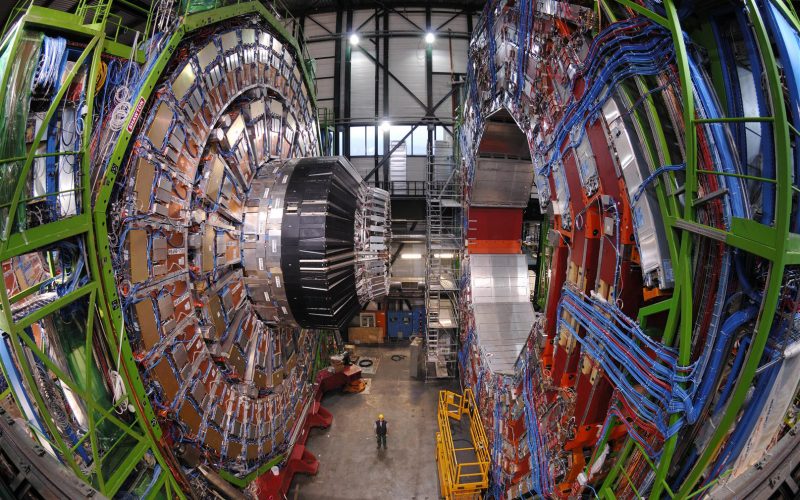

Scalable Data Analysis Applications for High Energy Physics

PIs: Douglas Thain and Kevin Lannon

For more than 10 years, we have collaborated with Prof. Kevin Lannon and the CMS physics group at Notre Dame to design and build large scale data analysis applications that interpret data produced by the Compact Muon Solenoid detector at CERN. These applications are both interesting and challenging from a computer science perspective, because they must consume large quantities of data (Terabytes to Petabytes), scale up to thousands of nodes in clusters, and yet also remain reliable and responsive to the end user. Our latest work makes use of the TaskVine framework along with software such as Dask and Coffea as the foundation to create a variety of custom applications, including Lobster, TopEFT, DV4, RS-Triphoton, and more. We continue to innovate at the interface between computer science and physical science.

Selected publications in this area: (see all papers)

- Reshaping High Energy Physics Applications for Near-Interactive Execution Using TaskVine

- Dynamic Task Shaping for High Throughput Data Analysis Applications in High Energy Physics

- Analysis Cyberinfrastructure: Challenges and Opportunities

- Scaling Data Intensive Physics Applications to 10k Cores on Non-Dedicated Clusters with Lobster

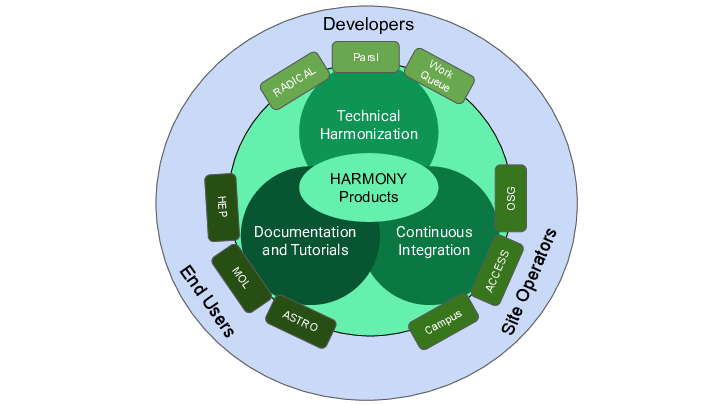

POSE Phase I: HARMONY: Harmonizing the High Performance Python Workflow Ecosystem

PIs: Douglas Thain (Notre Dame), Kyle Chard (U-Chicago), and Shantenu Jha (BNL)

Workflow management is important to effectively and productively deploying complex computations, e.g., combining chains of dependent and complex tasks such as data gathering, data processing, simulation, training, inference, validation, and visualization. This Pathways to Open-Source Ecosystems (POSE) project seeks to "harmonize" Python-based workflow management, build sustainability, and better support complex computational workflows, both in research and commercial environments. Harmony will deliver a software ecosystem that is used by some of the most impactful science projects, from understanding the beginning of the universe to discovering new therapeutics for viruses. This Phase I project will engage in ecosystem discovery, organization and governance, and community building to build the Harmony Open-Source Ecosystem (OSE).

Both small and large scale science require workflows to deliver science results. The Harmony OSE will provide new capabilities to integrate, interoperate, and interchange components to create high performance workflows. The project will lower the barrier to implementing sophisticated workflows and managing their execution at scale, all from a familiar Python interface. Harmony will bring together the community to consider how efforts can be more tightly integrated and managed and how an OSE can benefit all stakeholders.

Completed Research Projects

VC3: Virtual Clusters for Community Computation

DASPOS: Data and Software Preservation for Open Science

CAREER: Data Intensive Grid Computing on Active Storage Clusters

HECURA: Data Intensive Abstractions for High End Biometric Applications

Filesystems for Grid Computing

Sub Identities: Practical Containment for Distributed Systems

Debugging Grids with Machine Learning Techniques

TeamTrak: A Testbed for Cooperative Mobile Computing