Work Queue is a framework for building large distributed applications that span thousands of machines drawn from clusters, clouds, and grids. Work Queue applications are written in Python, Perl, or C using a simple API that allows users to define tasks, submit them to the queue, and wait for their completion. Tasks are executed by a general-purpose worker process that can run on any available machine. Each worker calls home to the manager process, arranges for data transfer, and executes the tasks. A range of scheduling and resource management features enables efficient use of large fleets of multicore servers, and the system tolerates many kinds of failure, so applications can scale dynamically and run robustly.
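
The define/submit/wait cycle and the "workers call home to the manager" arrangement can be illustrated with a small, self-contained Python model. This is a hypothetical sketch and not the Work Queue API. `ToyManager`, `toy_worker`, `run_all`, and their methods are invented names for illustration. Threads inside one process stand in for remote workers, and there is no data transfer. The sketch shows the shape of the manager loop: submit tasks, then keep waiting for completed ones until none remain outstanding. A task that raises an exception is recorded as failed rather than killing its worker.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Any, Callable, List, Optional


@dataclass
class Task:
    """One unit of work: an ID, the callable to run, and its outcome."""
    task_id: int
    func: Callable[[], Any]
    result: Any = None
    error: Optional[BaseException] = None


class ToyManager:
    """Hypothetical in-process stand-in for a manager (not the Work Queue API)."""

    def __init__(self) -> None:
        self._pending: "queue.Queue[Optional[Task]]" = queue.Queue()  # tasks awaiting a worker
        self._completed: "queue.Queue[Task]" = queue.Queue()  # finished tasks
        self._lock = threading.Lock()
        self._next_id = 1
        self._outstanding = 0  # submitted but not yet returned by wait()

    def submit(self, func: Callable[[], Any]) -> int:
        """Queue a callable as a new task and return its ID."""
        with self._lock:
            task = Task(self._next_id, func)
            self._next_id += 1
            self._outstanding += 1
        self._pending.put(task)
        return task.task_id

    def empty(self) -> bool:
        """True once every submitted task has been returned by wait()."""
        with self._lock:
            return self._outstanding == 0

    def wait(self, timeout: float) -> Optional[Task]:
        """Return one completed task, or None if none finishes within `timeout` seconds."""
        try:
            task = self._completed.get(timeout=timeout)
        except queue.Empty:
            return None
        with self._lock:
            self._outstanding -= 1
        return task

    def next_task(self) -> Optional[Task]:
        return self._pending.get()  # worker side: blocks until a task or sentinel arrives

    def report(self, task: Task) -> None:
        self._completed.put(task)  # worker side: hand a finished task back

    def shutdown(self, num_workers: int) -> None:
        for _ in range(num_workers):
            self._pending.put(None)  # one sentinel per worker tells it to exit


def toy_worker(manager: ToyManager) -> None:
    """Repeatedly ask the manager for work, run it, and report the outcome."""
    while True:
        task = manager.next_task()
        if task is None:
            return
        try:
            task.result = task.func()
        except Exception as exc:  # record the failure; keep the worker alive
            task.error = exc
        manager.report(task)


def run_all(funcs: List[Callable[[], Any]], num_workers: int = 4) -> List[Task]:
    """Submit every callable, wait until all finish, and return tasks ordered by ID."""
    manager = ToyManager()
    threads = [threading.Thread(target=toy_worker, args=(manager,), daemon=True)
               for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for func in funcs:
        manager.submit(func)
    finished: List[Task] = []
    while not manager.empty():
        task = manager.wait(timeout=5.0)
        if task is not None:
            finished.append(task)
    manager.shutdown(num_workers)
    for thread in threads:
        thread.join()
    return sorted(finished, key=lambda t: t.task_id)


if __name__ == "__main__":
    squares = [lambda n=n: n * n for n in range(5)]
    for task in run_all(squares + [lambda: 1 / 0]):
        print(task.task_id, task.result, repr(task.error))
```

In this sketch the manager alone tracks how many tasks are outstanding. Workers only pull work and push results back, which loosely mirrors the description of workers calling home. A real deployment would also move input and output files and run workers on separate machines, and this model omits both.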

Who Uses Work Queue?

Work Queue has been used to write applications that scale from a handful of workstations up to tens of thousands of cores running on supercomputers. Examples include the Parsl workflow system, the Coffea analysis framework, the Makeflow workflow engine, SHADHO, Lobster, NanoReactors, ForceBalance, Accelerated Weighted Ensemble, the SAND genome assembler, and the All-Pairs and Wavefront abstractions. The framework is easy to use and has been used to teach courses in parallel computing, cloud computing, distributed computing, and cyberinfrastructure at the University of Notre Dame, the University of Arizona, the University of Wisconsin, and many other institutions.

Learn About Work Queue

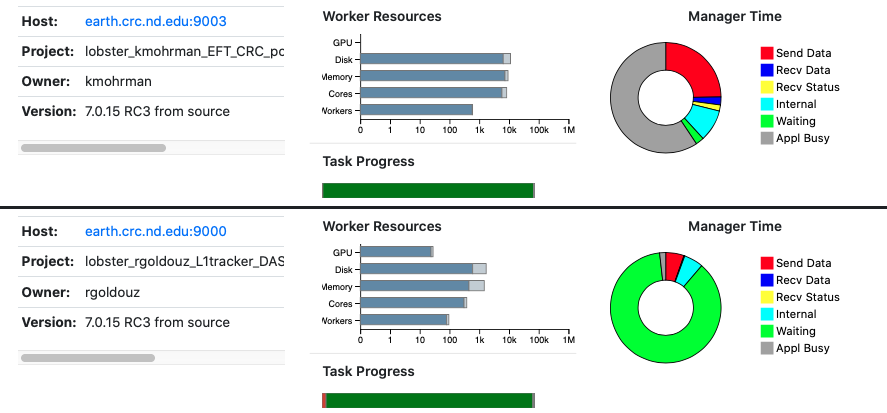

Online Status Display

Online Introduction to Work Queue

Publications


- Ben Tovar, Ben Lyons, Kelci Mohrman, Barry Sly-Delgado, Kevin Lannon, and Douglas Thain
- Thanh Son Phung, Logan Ward, Kyle Chard, and Douglas Thain
- Benjamin Tovar, Brian Bockelman, Michael Hildreth, Kevin Lannon, and Douglas Thain
- Tim Shaffer, Zhuozhao Li, Ben Tovar, Yadu Babuji, TJ Dasso, Zoe Surma, Kyle Chard, Ian Foster, and Douglas Thain
- Chao Zheng, Nathaniel Kremer-Herman, Tim Shaffer, and Douglas Thain
- Nick Hazekamp, Ben Tovar, and Douglas Thain
- Nathaniel Kremer-Herman, Benjamin Tovar, and Douglas Thain
- Nicholas Hazekamp, Upendra Kumar Devisetty, Nirav Merchant, and Douglas Thain
- Jeffrey Kinnison, Nathaniel Kremer-Herman, Douglas Thain, and Walter Scheirer
- Benjamin Tovar, Rafael Ferreira da Silva, Gideon Juve, Ewa Deelman, William Allcock, Douglas Thain, and Miron Livny
- Daniel (Yue) Zhang, Charles (Chao) Zheng, Dong Wang, Doug Thain, Chao Huang, Xin Mu, and Greg Madey
- Dinesh Rajan and Douglas Thain
- Matthias Wolf, Anna Woodard, Wenzhao Li, Kenyi Hurtado Anampa, Benjamin Tovar, Paul Brenner, Kevin Lannon, Mike Hildreth, and Douglas Thain
- Peter Ivie and Douglas Thain
- Anna Woodard, Matthias Wolf, Charles Mueller, Nil Valls, Ben Tovar, Patrick Donnelly, Peter Ivie, Kenyi Hurtado Anampa, Paul Brenner, Douglas Thain, Kevin Lannon, and Michael Hildreth
- Charles (Chao) Zheng and Douglas Thain
- Anna Woodard, Matthias Wolf, Charles Nicholas Mueller, Ben Tovar, Patrick Donnelly, Kenyi Hurtado Anampa, Paul Brenner, Kevin Lannon, and Michael Hildreth
- Badi Abdul-Wahid, Haoyun Feng, Dinesh Rajan, Ronan Costaouec, Eric Darve, Douglas Thain, and Jesus A. Izaguirre
- Andrew Thrasher, Zachary Musgrave, Brian Kachmark, Douglas Thain, and Scott Emrich
- Olivia Choudhury, Nicholas L. Hazekamp, Douglas Thain, and Scott Emrich
- Michael Albrecht, Dinesh Rajan, and Douglas Thain
- Dinesh Rajan, Andrew Thrasher, Badi Abdul-Wahid, Jesus A. Izaguirre, Scott Emrich, and Douglas Thain
- Christopher Moretti, Andrew Thrasher, Li Yu, Michael Olson, Scott Emrich, and Douglas Thain
- Badi Abdul-Wahid, Li Yu, Dinesh Rajan, Haoyun Feng, Eric Darve, Douglas Thain, and Jesus A. Izaguirre
- Andrew Thrasher, Zachary Musgrave, Douglas Thain, and Scott Emrich
- Peter Bui, Dinesh Rajan, Badi Abdul-Wahid, Jesus Izaguirre, and Douglas Thain
- Dinesh Rajan, Anthony Canino, Jesus A. Izaguirre, and Douglas Thain
- Irena Lanc, Peter Bui, Douglas Thain, and Scott Emrich
- Li Yu, Christopher Moretti, Andrew Thrasher, Scott Emrich, Kenneth Judd, and Douglas Thain
- Douglas Thain and Christopher Moretti
- Peter Bui, Li Yu, and Douglas Thain
- Christopher Moretti, Michael Olson, Scott Emrich, and Douglas Thain
- Li Yu, Christopher Moretti, Scott Emrich, Kenneth Judd, and Douglas Thain